Unlocking Anomaly Detection: Exploring Isolation Forests

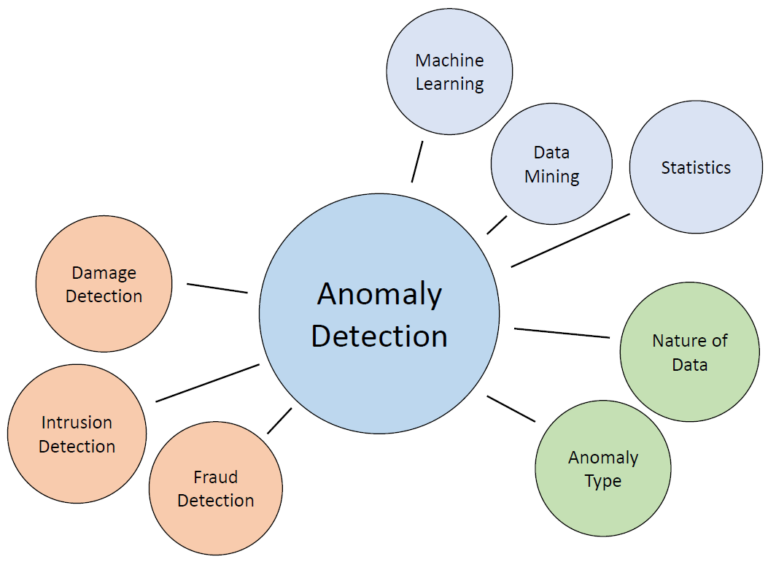

Anomaly detection is one of the most practically important applications of machine learning. One widely used tool for it is the Isolation Forest algorithm, valued for its efficiency in identifying outliers in data. This article looks at how Isolation Forests work and where they fit in anomaly detection.

Understanding Anomalies

Anomalies, also known as outliers, are data points that deviate significantly from the majority of the data. These anomalies can indicate critical information such as fraudulent transactions, network intrusions, or equipment malfunctions. Detecting these anomalies is crucial for maintaining the integrity and security of systems.

The Concept of Isolation Forests

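Introduced by Fei Tony Liu, Kai Ming Ting, and Zhi-Hua Zhou in 2008, Isolation Forests take a different approach from methods that first model normal behavior: they isolate anomalies directly. The algorithm builds an ensemble of binary trees, each of which recursively partitions the data by picking a random feature and a random split value within that feature's range. Anomalies tend to be separated from the rest in fewer splits than normal points, so a short average path length across the trees signals an anomaly. The intuition is that anomalies are ‘few and different’, making them easier to isolate.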
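To make the "fewer steps" intuition concrete, here is a minimal sketch in plain Python. It is a deliberately simplified, one-dimensional illustration and not the scikit-learn implementation: the helper names `isolation_depth` and `average_depth` are made up for this example, and a real Isolation Forest also picks a random feature at each split and trains each tree on a subsample.

```python
import random


def isolation_depth(point, data, rng, depth=0, max_depth=50):
    """Count the random splits needed to separate `point` from the other values."""
    if len(data) <= 1 or depth >= max_depth:
        return depth
    lo, hi = min(data), max(data)
    if lo == hi:
        return depth
    split = rng.uniform(lo, hi)
    # Keep only the side of the split that still contains the point.
    if point < split:
        side = [v for v in data if v < split]
    else:
        side = [v for v in data if v >= split]
    return isolation_depth(point, side, rng, depth + 1, max_depth)


def average_depth(point, data, n_trees=200, seed=0):
    """Average the isolation depth over many independent random partitionings."""
    rng = random.Random(seed)
    total = sum(isolation_depth(point, data, rng) for _ in range(n_trees))
    return total / n_trees


rng = random.Random(42)
normal = [rng.gauss(0.0, 1.0) for _ in range(200)]
data = normal + [8.0]  # one value far away from the rest

inlier = sorted(normal)[len(normal) // 2]  # a typical point near the center
outlier_depth = average_depth(8.0, data)
inlier_depth = average_depth(inlier, data)

print("average depth for the outlier:", outlier_depth)
print("average depth for a typical point:", inlier_depth)
```

The value far from the cluster should need noticeably fewer splits on average than the central one, which is the signal an Isolation Forest turns into an anomaly score.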

Key Features and Advantages

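- Scalability: Each tree is built on a small random subsample of the data (scikit-learn's default uses at most 256 samples per tree), so training cost grows roughly linearly with dataset size and the method remains practical for datasets with millions of data points.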

- Insensitivity to Multicollinearity: Each split uses a single randomly chosen feature and no coefficients are estimated, so correlated features do not destabilize the model the way they can in regression-based methods.

- Efficiency: The algorithm computes no distances or densities, which keeps its computational and memory cost low and makes it a reasonable candidate for low-latency scoring.

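- Versatility: Isolation Forests work directly on numerical data, and can be applied to categorical data once it has been encoded numerically (scikit-learn's implementation expects numeric input), making them useful in a variety of applications.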
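As an illustration of mixing categorical and numerical features, the following sketch one-hot encodes a categorical column before passing it to scikit-learn's `IsolationForest`. The toy data and the column meanings (`amounts`, `channels`) are invented for this example, and one-hot encoding is only one possible encoding choice, not a recommendation from the original paper.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.preprocessing import OneHotEncoder

# Hypothetical toy data: a numeric column and a categorical column.
amounts = np.array([[12.0], [15.5], [11.2], [14.8], [13.1], [950.0]])
channels = np.array([["web"], ["web"], ["store"], ["web"], ["store"], ["phone"]])

# Turn the categorical column into numeric indicator columns.
encoder = OneHotEncoder(handle_unknown="ignore")
channel_features = encoder.fit_transform(channels).toarray()

# Combine numeric and encoded categorical features into one matrix.
X = np.hstack([amounts, channel_features])

clf = IsolationForest(n_estimators=100, random_state=0)
labels = clf.fit_predict(X)  # +1 for inliers, -1 for outliers

print("feature matrix shape:", X.shape)
print("predicted labels:", labels)
```

With a dataset this small the labels are only illustrative; the point is the preprocessing step that produces a fully numeric feature matrix.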

Application in Industry

Isolation Forests find applications in various industries, including cybersecurity, finance, and healthcare. In cybersecurity, they can detect unusual patterns in network traffic, while in finance, they can identify fraudulent transactions. In healthcare, they can help detect anomalies in patient data, aiding in early disease diagnosis.

Implementing Isolation Forests

Implementing Isolation Forests is straightforward using libraries such as scikit-learn in Python. With just a few lines of code, you can train a model to detect anomalies in your data.

Sample Code:

```python
# Import the necessary libraries
import numpy as np
from sklearn.ensemble import IsolationForest

# Generate sample data
rng = np.random.RandomState(42)
X = 0.3 * rng.randn(100, 2)
X_train = np.r_[X + 2, X - 2]  # two clusters of normal points
X_outliers = rng.uniform(low=-4, high=4, size=(20, 2))  # uniformly scattered candidate outliers

# Train the Isolation Forest model
clf = IsolationForest(random_state=42)
clf.fit(X_train)

# Predict labels: +1 marks an inlier, -1 marks an outlier
y_pred_train = clf.predict(X_train)
y_pred_outliers = clf.predict(X_outliers)

# Print the predicted labels for each set
print("Predictions for training (mostly normal) points:\n", y_pred_train)
print("\nPredictions for generated outliers:\n", y_pred_outliers)
```
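Beyond hard labels, it is often useful to look at the underlying anomaly scores and to control how many points get flagged. The sketch below reuses the same synthetic data and shows scikit-learn's `score_samples` method (lower scores mean more anomalous) together with the `contamination` parameter. The value `0.05` is an arbitrary choice for illustration, not a recommended setting.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

# Same synthetic data as in the sample above.
rng = np.random.RandomState(42)
X = 0.3 * rng.randn(100, 2)
X_train = np.r_[X + 2, X - 2]
X_outliers = rng.uniform(low=-4, high=4, size=(20, 2))

# contamination sets the expected share of outliers in the training data,
# which determines the threshold used by predict (0.05 is an assumed value).
clf = IsolationForest(contamination=0.05, random_state=42)
clf.fit(X_train)

# predict returns +1 for inliers and -1 for outliers.
n_flagged_train = int((clf.predict(X_train) == -1).sum())
n_flagged_outliers = int((clf.predict(X_outliers) == -1).sum())

# score_samples returns a continuous score; lower means more anomalous.
train_scores = clf.score_samples(X_train)
outlier_scores = clf.score_samples(X_outliers)

print("flagged in training set:", n_flagged_train, "of", len(X_train))
print("flagged among generated outliers:", n_flagged_outliers, "of", len(X_outliers))
print("mean score, training points:", train_scores.mean())
print("mean score, generated outliers:", outlier_scores.mean())
```

Ranking points by score rather than relying only on the -1/+1 labels lets you choose a threshold that fits the cost of false alarms in your application.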

Conclusion

Isolation Forests offer a powerful and efficient solution for anomaly detection, with wide-ranging applications across industries. As the need for anomaly detection grows in an increasingly digital world, Isolation Forests stand out as a valuable tool in the machine learning toolkit.

References:

- Liu, Fei Tony, Ting, Kai Ming, and Zhou, Zhi-Hua. “Isolation Forest.” In Proceedings of the Eighth IEEE International Conference on Data Mining (ICDM), 2008.