Exploring the Statistical Foundations of ARIMA Models

By Kishore Kumar K

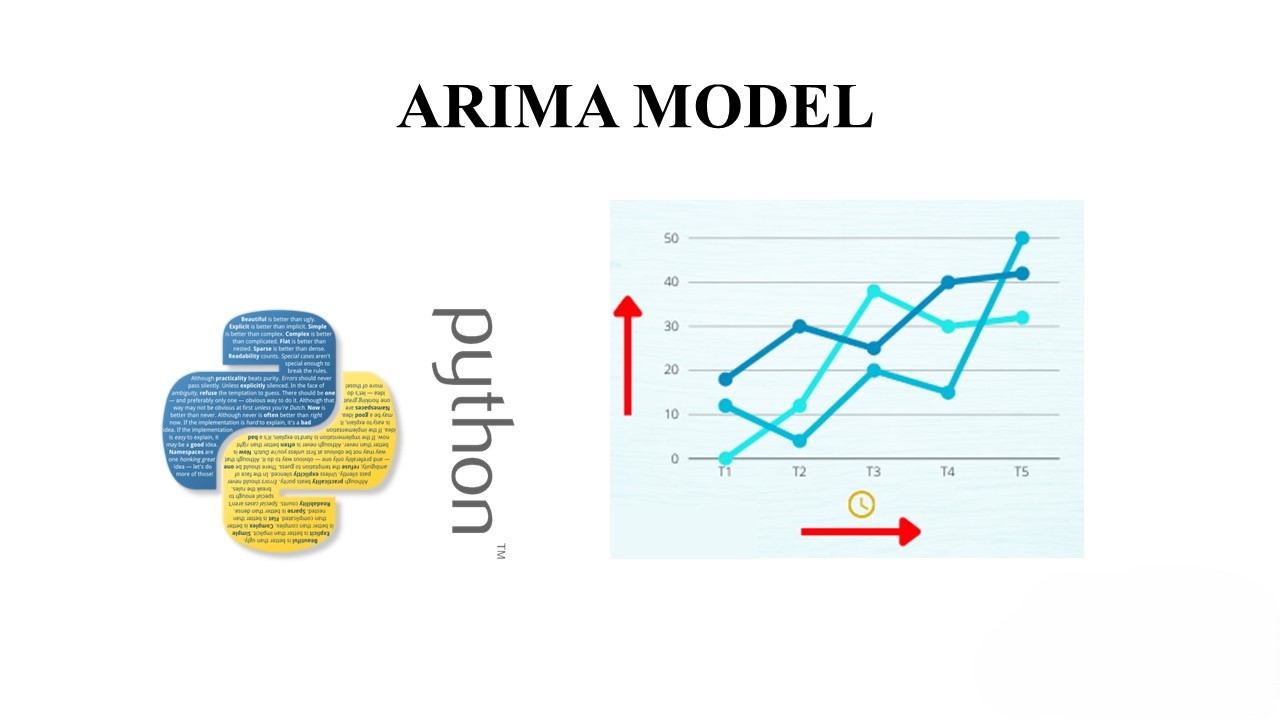

In the realm of time series analysis, ARIMA (AutoRegressive Integrated Moving Average) models stand out as a powerful tool for forecasting. Understanding the statistical concepts behind ARIMA can greatly enhance your ability to leverage this model effectively.

AutoRegressive (AR) Component:

The AR part of ARIMA signifies that the evolving variable of interest is regressed on its own lagged (i.e., prior) values. The AR parameter p determines the lag order, indicating how many lagged terms are included in the model. This component captures the linear relationship between the variable and its own lagged values.
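To make the idea of "regressing a series on its own lags" concrete, here is a minimal sketch that simulates an AR(1) process and recovers its coefficient with ordinary least squares. The coefficient, intercept, sample size, and seed are illustrative assumptions, and least squares is used only for simplicity; it is not necessarily how a library estimates the model.

```python
import numpy as np

# Simulate an AR(1) process: x_t = c + phi * x_{t-1} + noise
# (phi, c, n and the seed are illustrative choices, not from the article)
rng = np.random.default_rng(0)
phi, c, n = 0.7, 1.0, 2000
x = np.zeros(n)
for t in range(1, n):
    x[t] = c + phi * x[t - 1] + rng.normal()

# Regress x_t on x_{t-1} (plus an intercept) with ordinary least squares
X = np.column_stack([np.ones(n - 1), x[:-1]])
y = x[1:]
c_hat, phi_hat = np.linalg.lstsq(X, y, rcond=None)[0]

print(f"estimated intercept: {c_hat:.3f}, estimated phi: {phi_hat:.3f}")
```

With a reasonably long series, the estimated coefficient should land close to the value used in the simulation. A higher order p would simply add more lagged columns to the regression.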

Integrated (I) Component:

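The I in ARIMA represents the differencing of raw observations to make the time series stationary. Stationarity is crucial because many time series forecasting methods assume that the underlying time series is stationary. Differencing replaces each observation with its change from the previous one (the previous value is subtracted from the current value), which removes trends. Removing seasonality typically requires differencing at the seasonal lag instead, for example subtracting the value from 12 months earlier in monthly data.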
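As a small illustration of differencing, the sketch below takes the first difference of a series with a linear trend and then reverses the step with a cumulative sum, which is where the word "integrated" comes from. The numbers are made up for the example.

```python
import numpy as np

# A series with a linear trend (values are illustrative)
x = np.array([10.0, 12.0, 14.0, 16.0, 18.0, 20.0])

# First difference: x_t - x_{t-1}; the result is one element shorter
dx = np.diff(x)
print(dx)  # a constant series: the trend is gone

# "Integrating" reverses the step: cumulative sum added back to the first value
restored = np.concatenate([[x[0]], x[0] + np.cumsum(dx)])
print(np.allclose(restored, x))
```

The same operation is available in pandas as `Series.diff()`, which keeps the original length and puts a missing value in the first position.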

Moving Average (MA) Component:

The MA part models the current value as a linear combination of the current error term and error terms from earlier time steps. These errors are the random shocks that the model could not predict. The MA parameter q determines the order of the MA process, indicating the number of lagged forecast errors in the prediction equation.
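Written out, an MA(q) process is commonly expressed as below. The symbols are the conventional ones and are an assumption here, since the article does not fix a notation: the mean is written as mu, the error terms as epsilon, and the MA coefficients as theta.

```latex
X_t = \mu + \varepsilon_t + \theta_1 \varepsilon_{t-1} + \theta_2 \varepsilon_{t-2} + \dots + \theta_q \varepsilon_{t-q}
```

Only the most recent q shocks enter the equation, so a shock stops influencing the series after q time steps.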

Order of Differencing (d):

The order of differencing (d) is the number of times the differencing operation is applied to the time series to achieve stationarity. For example, d = 1 means the model is fitted to the first differences, and d = 2 means it is fitted to the differences of those differences. A series that is already stationary needs d = 0.
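The effect of the order d can be seen on a series with a quadratic trend, where a single difference is not enough. This is a minimal sketch with made-up coefficients, using the `n` argument of `np.diff` to apply the operation repeatedly.

```python
import numpy as np

# A series with a quadratic trend (coefficients are illustrative)
t = np.arange(8, dtype=float)
x = 3.0 + 2.0 * t + 0.5 * t**2

d1 = np.diff(x, n=1)  # first difference: still rising, so a trend remains
d2 = np.diff(x, n=2)  # second difference: constant, the trend is removed

print("d=1:", d1)
print("d=2:", d2)
```

Each round of differencing shortens the series by one observation, which is one reason to avoid differencing more often than needed.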

Model Identification:

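Identifying the appropriate orders (p, d, q) for an ARIMA model is a crucial step. The series is first differenced until it looks stationary, which gives d; a test such as the Augmented Dickey-Fuller test can help confirm this. The autocorrelation (ACF) and partial autocorrelation (PACF) plots of the differenced series then guide the other two orders: a PACF that cuts off after lag p suggests an AR(p) term, and an ACF that cuts off after lag q suggests an MA(q) term.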
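To show what a "cut-off" in the autocorrelation looks like, the sketch below computes the sample ACF of a simulated MA(1) series by hand. The helper `sample_acf`, the coefficient, and the seed are assumptions made for illustration; in practice the plotting helpers `plot_acf` and `plot_pacf` in `statsmodels.graphics.tsaplots` are the usual route.

```python
import numpy as np

def sample_acf(x, max_lag):
    """Sample autocorrelation of x at lags 0..max_lag (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    denom = np.dot(x, x)
    return np.array(
        [np.dot(x[: len(x) - k], x[k:]) / denom for k in range(max_lag + 1)]
    )

# Simulate an MA(1) series: x_t = eps_t + theta * eps_{t-1}
rng = np.random.default_rng(1)
n, theta = 5000, 0.8
eps = rng.normal(size=n + 1)
x = eps[1:] + theta * eps[:-1]

acf = sample_acf(x, max_lag=5)
band = 1.96 / np.sqrt(n)  # rough 95% band for a white-noise series

for lag, value in enumerate(acf):
    flag = "outside band" if abs(value) > band else "inside band"
    print(f"lag {lag}: {value:+.3f} ({flag})")
```

For an MA(1) series, the lag-1 autocorrelation should be clearly non-zero while higher lags stay close to zero, which is the pattern that points to q = 1.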

Estimation and Forecasting:

Once the ARIMA orders are identified, the model's coefficients are estimated, typically by maximum likelihood estimation. The fitted model can then be used for forecasting future values of the time series.
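Multi-step forecasts are produced recursively: each forecast is fed back in as if it were an observation. The sketch below shows this for the simplest case, an AR(1) model whose parameters are assumed to be already estimated. The function name `ar1_forecast` and the parameter values are hypothetical.

```python
def ar1_forecast(last_value, c, phi, steps):
    """Iterate x_{t+1} = c + phi * x_t forward, feeding each forecast back in."""
    forecasts = []
    current = last_value
    for _ in range(steps):
        current = c + phi * current
        forecasts.append(current)
    return forecasts

# Illustrative parameters, not estimated from any real data
path = ar1_forecast(last_value=10.0, c=1.0, phi=0.5, steps=5)
print(path)
```

With these values the forecasts move steadily toward the long-run mean c / (1 - phi) = 2, which is typical of a stationary AR model: the further ahead the forecast, the less the last observation matters.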

Sample Code:

ARIMA Model for Time Series Forecasting

Here’s a simple example of how to build an ARIMA model in Python using the statsmodels library:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from statsmodels.tsa.arima.model import ARIMA

from statsmodels.tsa.stattools import adfuller

# Load the dataset

data = pd.read_csv('your_time_series_data.csv')

# Check for stationarity with the Augmented Dickey-Fuller test (a small p-value suggests the series is stationary)

result = adfuller(data['value'])

print('ADF Statistic:', result[0])

print('p-value:', result[1])

# Difference once to inspect the stationary series (ARIMA below differences internally via d=1)

data['diff'] = data['value'].diff()

# Fit ARIMA model

model = ARIMA(data['value'], order=(2,1,2))

model_fit = model.fit()

# Forecast

forecast = model_fit.forecast(steps=10)

# Plotting

plt.plot(data['value'], label='Original Series')

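# Series.append was removed in pandas 2.0; pd.concat joins the last observation to the forecast
plt.plot(pd.concat([data['value'].iloc[-1:], forecast]), label='Forecasted Series')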

plt.legend()

plt.show()

Conclusion:

ARIMA models provide a robust framework for time series forecasting, leveraging concepts from auto-regression, differencing, and moving averages. By understanding the statistical foundations of ARIMA, practitioners can better interpret the results and make informed decisions in their forecasting endeavors.