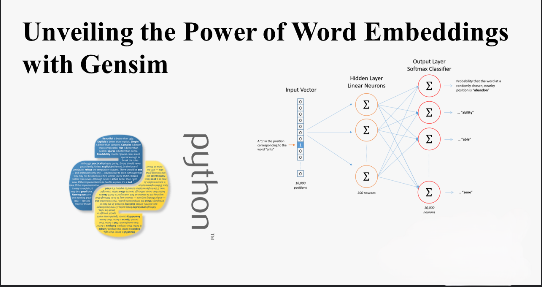

Unveiling the Power of Word Embeddings with Gensim

In the realm of Natural Language Processing (NLP), word embeddings have emerged as a game-changer. Unlike traditional approaches that treat each word as a separate, sparse feature, word embeddings use dense, low-dimensional vectors to capture the meaning and usage of a word. One pioneering model in this domain is Word2Vec, developed by Tomas Mikolov and team at Google. In this blog post, we’ll delve into the world of word embeddings using the original Word2Vec approach, implemented with the Gensim library.

Training Word Embeddings

Training word embeddings with Gensim is a breeze. All you need is a corpus of sentences in the language of interest. For our exploration, we’ll use 5,000,000 sentences from Dutch Wikipedia. Let’s jump into the code:

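    import os

    import gensim


    class SentenceCorpus:
        """Stream a pre-tokenized corpus from disk, one sentence per line."""

        def __init__(self, filename):
            self.filename = filename

        def __iter__(self):
            # Re-open the file on every pass so the corpus can be iterated repeatedly.
            with open(self.filename, "r", encoding="utf-8") as f:
                for line in f:
                    # Tokens are assumed to be separated by whitespace.
                    yield line.strip().split()


    WIKI_FILE = os.path.join("../data", "nlwiki_20170620_tok_small.txt")
    sentences = SentenceCorpus(WIKI_FILE)

    # Gensim 4.x renamed `size` to `vector_size`; on Gensim 3.x use `size=100` instead.
    model = gensim.models.Word2Vec(sentences, min_count=100, window=5, vector_size=100)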
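Why wrap the file in a class instead of a simple generator function? Word2Vec reads the corpus more than once (one pass to build the vocabulary, then one pass per training epoch), so it needs an iterable that can be restarted. The sketch below illustrates the difference using only the standard library. The toy sentences and the `sentence_generator` helper are made up for this illustration and are not part of the original setup.

```python
import os
import tempfile


class SentenceCorpus:
    """Restartable stream of whitespace-tokenized sentences (mirrors the class above)."""

    def __init__(self, filename):
        self.filename = filename

    def __iter__(self):
        # A fresh file handle is opened on every call to iter().
        with open(self.filename, "r", encoding="utf-8") as f:
            for line in f:
                yield line.strip().split()


def sentence_generator(filename):
    """Hypothetical one-shot alternative: exhausted after a single pass."""
    with open(filename, "r", encoding="utf-8") as f:
        for line in f:
            yield line.strip().split()


# Tiny made-up corpus, written to a temporary file purely for illustration.
with tempfile.NamedTemporaryFile(
    "w", suffix=".txt", delete=False, encoding="utf-8"
) as tmp:
    tmp.write("de koning groet de koningin\nde koffie is koud\n")
    toy_path = tmp.name

corpus = SentenceCorpus(toy_path)
first_pass = list(corpus)
second_pass = list(corpus)  # works: __iter__ re-opens the file

gen = sentence_generator(toy_path)
gen_first = list(gen)
gen_second = list(gen)  # empty: the generator has already been consumed

os.remove(toy_path)
```

A model trained from the one-shot generator would see no data after the vocabulary pass, which is why the restartable class is the safer choice.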

Using Word Embeddings

Now that we have our embeddings trained, let’s explore their capabilities. We can access the embeddings using the wv attribute of the model. For instance:

    # Retrieve the embedding for the word "koning" (king)
    king_embedding = model.wv["koning"]

We can also measure the similarity between two words:

    similarity_king_queen = model.wv.similarity("koning", "koningin")  # Expected: high
    similarity_king_coffee = model.wv.similarity("koning", "koffie")  # Expected: low

Furthermore, finding words most similar to a target word is straightforward:

    similar_words_to_king = model.wv.similar_by_word("koning", topn=10)

The model even allows us to explore analogies:

    # "koning" (king) - "man" (man) + "vrouw" (woman): ideally close to "koningin" (queen)
    analogy_result = model.wv.most_similar(positive=["vrouw", "koning"], negative=["man"], topn=10)

Visualizing Embeddings

Visualizing embeddings in a high-dimensional space can be challenging. We use t-distributed Stochastic Neighbor Embedding (t-SNE) to map the embeddings to a 2D space for visualization:

    import matplotlib.pyplot as plt
    import numpy as np
    from sklearn.manifold import TSNE

    target_word = "belgië"
    # The 200 nearest neighbours of the target word
    selected_words = [w for w, _ in model.wv.most_similar(positive=[target_word], topn=200)]
    # Stack the vectors into a single 2D array for scikit-learn
    embeddings = np.array([model.wv[w] for w in selected_words])

    mapped_embeddings = TSNE(n_components=2, metric="cosine", init="pca").fit_transform(embeddings)

    # Plot the 2D embeddings
    plt.scatter(mapped_embeddings[:, 0], mapped_embeddings[:, 1])

    # Annotate each point with its word
    for i, txt in enumerate(selected_words):
        plt.annotate(txt, (mapped_embeddings[i, 0], mapped_embeddings[i, 1]))

    plt.show()
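The `metric` setting in the t-SNE call asks for cosine distance, which is one minus the cosine similarity of two vectors. It depends only on the angle between them, not on their lengths. This is the same notion of closeness that Gensim's `similarity` method reports. Here is a minimal pure-Python sketch of the computation; the three short vectors are made up for illustration, and real embeddings have 100 or more dimensions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cosine_distance(u, v):
    """Cosine distance: 0 for identical directions, 1 for orthogonal vectors."""
    return 1.0 - cosine_similarity(u, v)


# Made-up vectors, for illustration only.
a = [1.0, 2.0, 3.0]
b = [2.0, 4.0, 6.0]   # same direction as a, twice the length
c = [-3.0, 0.0, 1.0]  # orthogonal to a (their dot product is 0)

dist_ab = cosine_distance(a, b)  # approximately 0: length does not matter
dist_ac = cosine_distance(a, c)  # approximately 1: no shared direction
```

Because vector length is ignored, frequent words with large vectors and rare words with small ones can still end up as close neighbours in the plot.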

Exploring Hyperparameters

Choosing the right hyperparameters is crucial. We evaluate the impact of embedding size and context window:

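    import pandas as pd

    sizes = [100, 200, 300]
    windows = [2, 5, 10]

    # Results table (assumed to be a pandas DataFrame): one column per size, one row per window
    df = pd.DataFrame(index=windows, columns=sizes, dtype=float)

    for size in sizes:
        for window in windows:
            model = gensim.models.Word2Vec(sentences, min_count=100, window=window, vector_size=size)
            # `evaluate` and `word2pos` are not defined in this post; they belong to the evaluation setup
            acc = evaluate(model, word2pos)
            df.loc[window, size] = acc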

The results suggest that smaller contexts tend to work better, and 200-dimensional embeddings strike a balance.
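The loop over sizes and windows is a plain grid search, and the pattern can be sketched independently of the model. In the sketch below, `grid_search` and `placeholder_score` are hypothetical names introduced for illustration. The placeholder returns an arbitrary number so that the code runs without a corpus; it is not a real accuracy and says nothing about which setting is best.

```python
from itertools import product


def grid_search(sizes, windows, train_and_score):
    """Score every (size, window) pair and return all scores plus the best pair."""
    scores = {}
    for size, window in product(sizes, windows):
        scores[(size, window)] = train_and_score(size, window)
    best = max(scores, key=scores.get)
    return scores, best


def placeholder_score(size, window):
    # Hypothetical stand-in: an arbitrary number derived from the arguments.
    # In practice this would train Word2Vec with these settings and evaluate it.
    return (size % 7 + window % 3) / 10


scores, best = grid_search([100, 200, 300], [2, 5, 10], placeholder_score)
```

Passing the training and evaluation step in as a function keeps the search loop reusable when the evaluation task changes.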

Clustering Embeddings

Clustered embeddings can be valuable for tasks like Named Entity Recognition. We use agglomerative clustering and save the clusters to a file:

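    from collections import defaultdict

    from sklearn.cluster import AgglomerativeClustering
    from sklearn.preprocessing import normalize

    # Gensim 4.x: `index_to_key` replaces the old `wv.vocab` dictionary
    vocab = list(model.wv.index_to_key)
    vectors = [model.wv[w] for w in vocab]
    # L2-normalize so that Euclidean distance between vectors tracks cosine similarity
    vectors_norm = normalize(vectors)

    clusterer = AgglomerativeClustering(n_clusters=500)
    clusters = clusterer.fit_predict(vectors_norm)

    # Group the words by their cluster label
    cluster_dictionary = defaultdict(list)
    for word, cluster in zip(vocab, clusters):
        cluster_dictionary[cluster].append(word)

    # Save clusters to a file
    with open("data/clusters_nl.tsv", "w", encoding="utf-8") as o:
        for c in cluster_dictionary:
            for w in cluster_dictionary[c]:
                o.write(f"{w}\t{c}\n")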
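To use the clusters in a downstream task, the file has to be read back and turned into features. The sketch below shows one possible way to do that with the standard library. The helper names `load_clusters` and `cluster_feature`, the `cluster=UNK` fallback, and the toy file contents are all assumptions made for illustration, not part of the original pipeline.

```python
import os
import tempfile


def load_clusters(path):
    """Read a word<TAB>cluster file into a dict mapping word -> cluster id."""
    word_to_cluster = {}
    with open(path, "r", encoding="utf-8") as f:
        for line in f:
            word, cluster = line.rstrip("\n").split("\t")
            word_to_cluster[word] = int(cluster)
    return word_to_cluster


def cluster_feature(token, word_to_cluster):
    """Return a string feature for a token, with a fallback for unseen words."""
    cluster = word_to_cluster.get(token)
    return f"cluster={cluster}" if cluster is not None else "cluster=UNK"


# Tiny made-up file in the same two-column format, for illustration only.
with tempfile.NamedTemporaryFile(
    "w", suffix=".tsv", delete=False, encoding="utf-8"
) as tmp:
    tmp.write("koning\t12\nkoningin\t12\nkoffie\t87\n")
    toy_path = tmp.name

word_to_cluster = load_clusters(toy_path)
os.remove(toy_path)

features = [cluster_feature(t, word_to_cluster) for t in ["koning", "koffie", "fiets"]]
```

Words that share a cluster get the same feature value, so a tagger can generalize from words seen in training to similar words it has never seen.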

Conclusion

Word embeddings open up exciting possibilities in NLP, allowing us to model word meanings and discover semantic relationships. Gensim’s Word2Vec implementation makes this landscape easy to explore. From training embeddings to visualizing them, tuning hyperparameters, and clustering the results, word embeddings offer a rich playground for language exploration.

In future experiments, we’ll leverage these embeddings for Named Entity Recognition and other advanced NLP tasks. Stay tuned for more insights into the fascinating world of word embeddings!